Show-1: Marrying Pixel and Latent Diffusion Models for Text-to-Video Generation

Show Lab, National University of Singapore

* Equal Contribution

✉ Corresponding Author

"Astronaut in a Jungle" by Syd Mead, cold color palette, muted colors, detailed.

A beautiful fluffy domestic hen sitting on white eggs in a brown nest, eggs are under the hen.

A fierce dragon breathing fire.

A lion made entirely of autumn leaves.

A panda taking a selfie.

A rainbow colored giraffe walks on the moon.

A tiny finch on a branch with spring flowers on background.

Cute small dog sitting in a movie theater eating popcorn watching a movie.

Lamborghini huracan car covered in flames, night.

A wolf drinking coffee in a cafe.

A panda besides the waterfall is holding a sign that says "Show Lab".

A robot cat eating spaghetti.

A tiger coming out of a television.

Alien enjoys food.

An elephant is walking under the sea.

Female bunny as a chef, making cakes in a modern kitchen.

King Lion sitting on throne, with crown, in jungle.

Shot of Vaporwave fashion dog in miami.

A cherry blossom tree in full bloom amidst an arctic tundra showering petals on a polar bear.

A panda besides the waterfall is holding a sign that says "Show 1".

A superhero dog wearing a red cape, flying in the sky.

Elon Musk selling watermelon on the street.

Donald Trump made out of fruits and vegetables by giuseppe arcimboldo, oil on canvas.

Toad practicing karate.

Motorcyclist with space suit riding on moon with storm and galaxy in background.

Close up of mystic cat, like a buring phoenix, red and black colors.

A unicorn in a magical grove, extremely detailed.

A burning lamborghini on the road.

Giant octopus invades new york city.

An isolated iron lighthouse shining light out to sea at night as it sits on a rocky stone island being battered by huge ocean waves, smoky orange clouds filling the sky.

Abstract

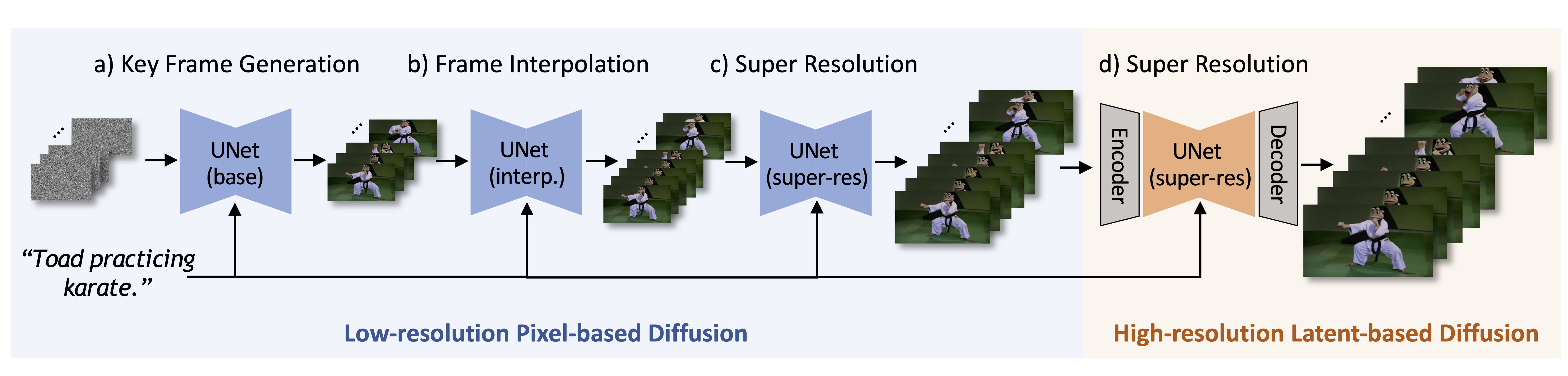

Significant advancements have been achieved in the realm of large-scale pre-trained text-to-video Diffusion Models (VDMs). However, previous methods either rely solely on pixel-based VDMs, which come with high computational costs, or on latent-based VDMs, which often struggle with precise text-video alignment. In this paper, we are the first to propose a hybrid model, dubbed as Show-1, which marries pixel-based and latent-based VDMs for text-to-video generation. Our model first uses pixel-based VDMs to produce a low-resolution video of strong text-video correlation. After that, we propose a novel expert translation method that employs the latent-based VDMs to further upsample the low-resolution video to high resolution. Compared to latent VDMs, Show-1 can produce high-quality videos of precise text-video alignment; Compared to pixel VDMs, Show-1 is much more efficient (GPU memory usage during inference is 15G vs 72G). We also validate our model on standard video generation benchmarks. Our code and model weights are publicly available at https://github.com/showlab/Show-1.

Method

Pixel-based VDMs can generate motion accurately aligned with the textual prompt but typically demand expensive computational costs in terms of time and GPU memory, especially when generating high-resolution videos. Latent-based VDMs are more resource-efficient because they work in a reduced-dimension latent space.

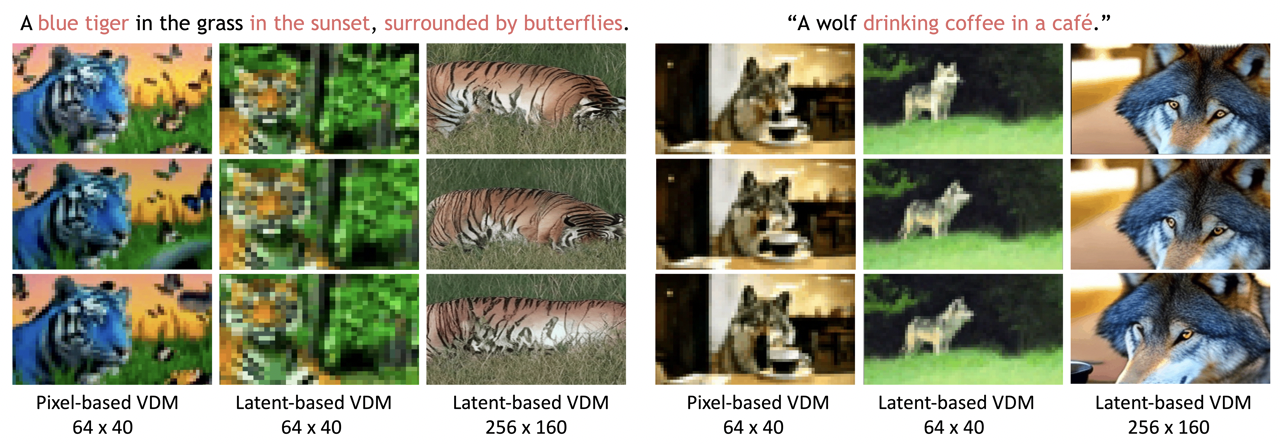

But it is challenging for such small latent space (e.g., 64×40 for 256×160 videos) to cover rich yet necessary visual semantic details as described by the textual prompt. Therefore, as shown in above figure, the generated videos often are not well-aligned with the textual prompts. On the other hand, if the generated videos are of relatively high resolution (e.g., 256×160 videos), the latent model will focus more on spatial appearance but may also ignore the text-video alignment.

To marry the strength and alleviate the weakness of pixel-based and latent-based VDMs, we introduce Show-1, an efficient text-to-video model that generates videos of not only decent video-text alignment but also high visual quality.